In my first post, I wrote about several options to get my hands on a cluster. The most promising one seems to be using Amazon Web Services. But there is also another option that came to my mind:

Why not build a cluster using single-board computers like the Raspberry Pi?

I always wanted to play around with one of these boards, as some of my friends and colleagues use them as media centers in their living rooms or for server tasks like file servers, routers or web servers. I also wanted to do some practical crafting besides research and programming. I’m fully aware that the performance of such a board is not comparable to a modern Intel x86-64 processor, but in my case raw performance is not the top priority; it’s more about evaluating the scalability of distributed algorithms. Scalability is a relative measure that describes how a system or algorithm behaves when you add more resources or input data to it. I will write a bit more about that topic in a future post. For now, the next step was to find out whether clusters built from single-board computers already exist.
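To make “scalability” a bit more concrete: for a fixed input size (strong scaling) it is commonly expressed as the speedup S(p) = T(1)/T(p) and the parallel efficiency E(p) = S(p)/p, where T(p) is the runtime on p nodes. The minimal Python sketch below computes both. The runtimes in it are made-up numbers for illustration, not measurements from any of the clusters in this post.

```python
def speedup(t_serial: float, t_parallel: float) -> float:
    """Speedup S(p) = T(1) / T(p) for a fixed problem size."""
    return t_serial / t_parallel


def efficiency(t_serial: float, t_parallel: float, nodes: int) -> float:
    """Parallel efficiency E(p) = S(p) / p; 1.0 means perfect linear scaling."""
    return speedup(t_serial, t_parallel) / nodes


# Hypothetical runtimes in seconds for the same job on 1, 2, 4 and 8 nodes.
RUNTIMES = {1: 120.0, 2: 64.0, 4: 35.0, 8: 20.0}

if __name__ == "__main__":
    t1 = RUNTIMES[1]
    for p, tp in RUNTIMES.items():
        print(f"{p} nodes: speedup {speedup(t1, tp):.2f}, "
              f"efficiency {efficiency(t1, tp, p):.2f}")
```

An efficiency close to 1.0 means the extra nodes are fully used; a falling efficiency points at communication overhead or serial parts of the algorithm. When the input grows together with the node count (weak scaling), efficiency is instead measured as T(1)/T(p) with the per-node input held constant.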

I started searching for single-board clusters and I was not disappointed. Three projects caught my attention. On the one hand, I was a bit sad that a cluster with a similar approach already existed, but on the other hand it is kind of motivating that the idea can’t be so bad if someone else had it too. And just because such systems already exist doesn’t mean that I can use them :)

40-node Raspberry Pi Cluster by David Guill

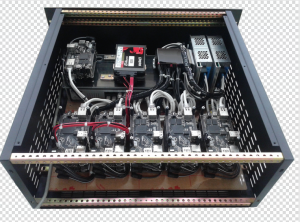

The first build is a distributed system with power, network and computing components inside a custom acrylic case. So let’s start with some pictures of this impressive project, taken from the author’s website.

David had exactly the same motivation for building a cluster: he needed a testbed for distributed algorithms because he had no access to a supercomputer or a distributed system. Here are his goals, quoted from his website:

- Build a model supercomputer, which structurally mimics a modern supercomputer.

- All hardware required for the cluster to operate is housed in a case no larger than a full tower.

- Parts that are likely to fail should be easy to replace.

- It should be space-efficient, energy-efficient, economically-efficient, and well-constructed.

- Ideally, it should be visually pleasing.

Judging by the pictures, the description on the blog and the very nice YouTube videos, he seems to have reached all of his goals. He describes every step of building his cluster, which makes for a very nice read and a great source of information. The skills he acquired along the way are an extra motivation for me.

The hardware specifications of his cluster are also very impressive:

- 40 Raspberry Pi Model B single-board computers

- 40 cores Broadcom BCM2835 @700 MHz

- 20 GB total distributed RAM

- 5 TB disk storage – upgradeable to 12 TB

- ~440 GB flash storage

- Internal 10/100 network connects individual nodes

- Internal wireless N network can be configured as an access point or bridge.

- External ports: four 10/100 LAN and one gigabit LAN (internal network), 1 router uplink

- Case has a mostly toolless design, facilitating easy hot-swapping of parts

- Outer dimensions: 9.9″ x 15.5″ x 21.8″.

- Approximate system cost of $3,000.

So far, David hasn’t published any benchmark results on computing performance or power consumption for the cluster, and I couldn’t figure out what exactly he is doing with it. I hope there will be more content soon. Nonetheless, this project is by far the most beautifully designed single-board computer cluster I could find and a great source of inspiration for me.

32-node Raspberry Pi Cluster by Joshua Kiepert

The second cluster I looked into was built as a testbed for a dissertation on data sharing in wireless sensor networks, so there was also the need for a cheap, distributed environment to run experiments on. A 17-page PDF describes the cluster components in detail and even includes some evaluation results on power consumption and scalability. Here are some pictures of Joshua’s cluster:

As you can see in the pictures, the cluster is arranged in four racks with eight Pis per rack inside a custom acrylic chassis. The PCB stand-offs are a nice-looking and cheap way to organize the boards in racks. The switch and the power supplies are not integrated into the chassis. Unlike David’s build, this cluster has no extra storage devices such as HDDs or SSDs attached. Here is a summarized hardware list taken from the PDF:

- 33 Raspberry Pi Model B single-board computers

- 33 cores Broadcom BCM2835 @1000 MHz (overclocked)

- ~16 GB total distributed RAM

- ~264 GB flash storage (class 10 SD Cards)

- Cisco SF200-48 Switch 48 10/100 Ports (managed)

- Internal 10/100 network connects individual nodes

- 2x Thermaltake TR2 430W ATX12V (5V@30A)

- Approximate system cost of $2,000.

- 167 Watts maximum power usage

- Arch Linux ARM
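The RAM totals in these lists follow directly from the node count, assuming 512 MB per board (the amount on the revision 2 Model B; the lists above don’t state it) and binary units (1 GB = 1024 MB). The same calculation gives exactly the 20 GB listed for David’s 40 boards. Dividing the maximum power draw by the node count gives a rough per-node figure; since the 167 W may also cover parts other than the boards, it is at most an average upper bound per Pi.

```latex
\begin{aligned}
33 \times 512\ \text{MB} &= 16{,}896\ \text{MB} = 16.5\ \text{GB} \\
40 \times 512\ \text{MB} &= 20{,}480\ \text{MB} = 20\ \text{GB} \\
167\ \text{W} / 33 &\approx 5.1\ \text{W per node}
\end{aligned}
```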

Using an MPI program that calculates Pi, the author demonstrated the speedup of the cluster as the number of physical nodes used for computation increases. The benchmarks were not I/O bound, so reading from or writing to the flash storage had no impact on the results.
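Joshua’s actual benchmark code is in his repository and may well differ; the sketch below only illustrates the classic way such a program splits the work. Pi is approximated as the integral of 4/(1+x²) over [0, 1] with the midpoint rule, each rank sums every size-th interval, and the partial sums are added in a final reduction. To stay self-contained, it simulates the ranks in a loop instead of running real MPI processes.

```python
import math


def partial_pi(rank: int, size: int, n: int) -> float:
    """One rank's midpoint-rule share of the integral of 4/(1+x^2) on [0, 1].

    Ranks take interleaved intervals i = rank, rank + size, rank + 2*size, ...
    """
    h = 1.0 / n
    total = 0.0
    for i in range(rank, n, size):
        x = h * (i + 0.5)  # midpoint of interval i
        total += 4.0 / (1.0 + x * x)
    return h * total


def compute_pi(n: int, size: int) -> float:
    """Simulate `size` ranks sequentially and reduce (sum) their partial results."""
    return sum(partial_pi(rank, size, n) for rank in range(size))


if __name__ == "__main__":
    for size in (1, 2, 4, 8):
        approx = compute_pi(200_000, size)
        print(f"{size} ranks: pi ~ {approx:.10f}, error {abs(approx - math.pi):.2e}")
```

With mpi4py, each process would instead call `partial_pi(comm.Get_rank(), comm.Get_size(), n)` and combine the results with `comm.reduce(part, op=MPI.SUM, root=0)`. Since every rank sends back a single float, communication is negligible, which is one reason such a benchmark scales well and does not touch storage at all.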

Joshua concluded that the Raspberry Pi cluster has proved quite successful and that he is doing his dissertation work exclusively on the cluster now. There is also a Git repository which contains documentation, bash scripts and some C examples for the cluster.

22-node Cubieboard Cluster by Chanwit Kaewkasi

The third cluster I found is called “Aiyara cluster” and was developed at Suranaree University of Technology in Thailand. It’s a research project, and the authors published an article about the cluster and their first experimental results at ICSEC 2014. They sent me the paper, so I could look at the hardware specification and their experiments. Of the three clusters presented here, this is the only one described in an academic article including a specific use case, benchmarks and detailed experimental results. There is also a short Wikipedia article and a Facebook page about the cluster. But let’s have a look at it first:

Chanwit and his team wanted to find out whether an ARM cluster is suitable for processing large amounts of data with the Apache Hadoop and Apache Spark frameworks. So, in contrast to Joshua’s cluster, this cluster runs data-intensive jobs. In their experiments, they used a 34 GB Wikipedia article file as input and calculated the most frequent words. Their use of Hadoop and Spark is very interesting for me because, as I wrote in my last article, I want to evaluate different graph processing frameworks, two of them being Apache Giraph and GraphX, which sit on top of Hadoop and Spark respectively. In general, they wanted to find out how the cluster behaves with very data-intensive tasks, so they ran it in three different configurations to see how much local versus distributed storage matters:

- 22 Cubieboard single-board computers

- 22 cores ARM Cortex-A8 @1000 MHz

- 22 GB total distributed RAM

- Internal 10/100 network connects individual nodes

- Config 1: 2 SSD drives attached to 2 physical nodes

- Config 2: 20 HDD drives attached to 20 physical nodes

- Config 3: 20 SSD drives attached to 20 physical nodes

- Approximate system cost of $2,000 (Config 1).

- Linaro Ubuntu
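The word-frequency job described above follows the classic MapReduce pattern: each worker counts the words in its share of the input, and the partial counts are then merged by key. The plain-Python sketch below shows only that pattern on in-memory lines. It is not the authors’ code, and the tokenization rule (lowercase letters and apostrophes) is my own assumption.

```python
import re
from collections import Counter
from functools import reduce

WORD = re.compile(r"[a-z']+")  # assumed tokenization: lowercase letters and apostrophes


def map_phase(line: str) -> Counter:
    """Map: count the words of one input line (one 'split' of the data)."""
    return Counter(WORD.findall(line.lower()))


def reduce_phase(total: Counter, partial: Counter) -> Counter:
    """Reduce: merge partial counts by adding them key by key."""
    total.update(partial)
    return total


def top_words(lines, k: int):
    """Return the k most frequent words as (word, count) pairs."""
    counts = reduce(reduce_phase, map(map_phase, lines), Counter())
    return counts.most_common(k)


if __name__ == "__main__":
    sample = ["The cat and the hat", "The end of the story"]
    print(top_words(sample, 2))
```

In PySpark the same idea is usually written as `flatMap` over the lines, `map` of each word to `(word, 1)` and `reduceByKey` to sum the counts; Spark then runs the map step on the workers and shuffles the pairs over the network for the reduction, which is where storage and network placement start to matter.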

In the article, the authors state that the raw performance of a single Cubieboard is around 2,000 MIPS (Dhrystone) and 200 MFLOPS (Whetstone); the whole cluster’s raw performance would thus be around 40,000 MIPS and 4 GFLOPS.
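Scaling the single-board figures to the whole cluster is a simple multiplication. Note that all 22 boards would give 44,000 MIPS; the quoted totals correspond to 20 boards, which I assume refers to the 20 nodes running Spark workers (this is my reading, not something stated in the figures above).

```latex
\begin{aligned}
20 \times 2{,}000\ \text{MIPS} &= 40{,}000\ \text{MIPS} \\
20 \times 200\ \text{MFLOPS} &= 4{,}000\ \text{MFLOPS} = 4\ \text{GFLOPS}
\end{aligned}
```

These are sums of synthetic per-board benchmarks, so they describe peak compute rather than what a data-intensive job actually achieves.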

In Config 1, two SSDs are attached to two physical nodes, each running an HDFS data node as well as a Spark worker. The HDFS name node runs on the same physical node as the Spark master. There are 20 Spark workers in total. Config 2 replaces the two SSDs with 20 HDDs, each connected to a physical node running an HDFS data node. In Config 3, the 20 HDDs are replaced by 20 SSDs.

Configs 2 and 3 test the behaviour when data is available locally on a physical node, while Config 1 stresses the network.
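A back-of-the-envelope sketch of why Config 1 stresses the network: if the input is read evenly by the 20 Spark workers but only two machines hold it, about 18 of 20 shares have to leave through just two 100 Mbit/s ports of the 10/100 network. The model below is my own simplification (it ignores HDFS replication, task scheduling and disk speed) and is not taken from the paper; the node IDs are hypothetical.

```python
LINK_MB_PER_S = 100 / 8  # one 100 Mbit/s port = 12.5 MB/s, ignoring protocol overhead


def local_fraction(workers: set[int], data_nodes: set[int]) -> float:
    """Share of Spark workers that sit on a node which also runs an HDFS data node."""
    return len(workers & data_nodes) / len(workers)


def min_network_seconds(input_gb: float, workers: set[int], data_nodes: set[int]) -> float:
    """Lower bound on the time needed to ship the non-local input over the network.

    Assumes the input is read evenly by all workers, so the non-local share
    leaves through the data nodes' links (decimal units: 1 GB = 1000 MB).
    """
    remote_mb = input_gb * 1000 * (1 - local_fraction(workers, data_nodes))
    egress_mb_per_s = len(data_nodes) * LINK_MB_PER_S
    return remote_mb / egress_mb_per_s


WORKERS = set(range(20))  # hypothetical IDs of the 20 worker nodes

if __name__ == "__main__":
    configs = {"Config 1 (2 data nodes)": {0, 1}, "Config 2/3 (20 data nodes)": WORKERS}
    for name, data_nodes in configs.items():
        secs = min_network_seconds(34, WORKERS, data_nodes)
        print(f"{name}: local {local_fraction(WORKERS, data_nodes):.0%}, "
              f">= {secs / 60:.1f} min of network transfer")
```

Under these assumptions, Config 1 needs at least about 20 minutes just to move the data, while in Configs 2 and 3 every worker reads from its own drive and the bound drops to zero.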

The authors concluded that they achieved a good result by demonstrating the cluster with Apache Spark over HDFS. With Config 3, the cluster processed 34 GB of data in approximately 65 minutes, a throughput of 0.51 GB/min (Config 2: 66 minutes, 0.509 GB/min). In Config 1, the cluster consumed an impressively low 0.061 kWh during the benchmark, compared with 0.167 kWh with 20 SSDs attached. Finally, Config 2 consumed twice as much energy, which is due to the mechanical nature of HDDs. They also found that the cluster spends most of its time on I/O rather than on computation.
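The reported figures can be turned into a few derived numbers. The sketch below assumes that the 0.167 kWh for Config 3 was measured over the same ~65-minute run; the average power and energy per GB are my own arithmetic, not values from the paper. Computed from the rounded inputs, the throughput comes out at about 0.52 GB/min, so the slightly lower reported 0.51 GB/min presumably reflects the unrounded input size and runtime.

```python
def throughput_gb_per_min(input_gb: float, minutes: float) -> float:
    """Processing throughput in GB per minute."""
    return input_gb / minutes


def average_power_w(energy_kwh: float, minutes: float) -> float:
    """Average power draw: energy (converted to Wh) divided by runtime in hours."""
    return energy_kwh * 1000 / (minutes / 60)


def energy_per_gb_wh(energy_kwh: float, input_gb: float) -> float:
    """Energy spent per GB of input, in Wh."""
    return energy_kwh * 1000 / input_gb


if __name__ == "__main__":
    # Config 3: ~34 GB in ~65 minutes, 0.167 kWh (rounded values from the text).
    print(f"throughput:    {throughput_gb_per_min(34, 65):.3f} GB/min")
    print(f"average power: {average_power_w(0.167, 65):.0f} W")
    print(f"energy per GB: {energy_per_gb_wh(0.167, 34):.2f} Wh/GB")
```

Under that assumption, the 20-SSD setup averages roughly 154 W and spends about 4.9 Wh per GB of input.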

Those were the three projects I looked into in more detail. While the first one is my favorite in terms of craftsmanship and design, the second and third present experimental results, and the third in particular uses frameworks I want to look into further. Every one of them motivates me to build my own cluster. The next step is to find a single-board computer that fits my needs and start playing around with it.

Of course, there are more projects and articles on ARM clusters; here is a list, which I will update from time to time.

- Building and benchmarking a low power ARM cluster: Master Thesis 2013 using PandaBoard and Raspberry Pi (2012)

- Iridis-pi: a low-cost, compact demonstration cluster (64-node Raspberry Pi Model B cluster built with LEGO, 2013)